Using and Debugging a Web Font

After making a subset of a TTF font as a new custom Web Font (see Make a Web Font Subset), I moved on to testing and using it.

First of all, I would like to highlight this excellent in-browser font decoding tool:

It’s pronounced, "What can my font do?" and it did the best job of any online tool to display the characters in my font along with other useful information. And it’s visually fun.

Using the font on a web page

There are two parts. First, you define the font face using a CSS "at-rule":

Then you apply the font face with the regular font-family property.

Here’s an example applying a Web Font to the entire body of the page, with any serif font as the fallback:

    @font-face {
      font-family: 'My Cool Font';
      src: url("cool-font.woff2") format("woff2");
    }

    body {
      font-family: "My Cool Font", serif;
    }

(In my case, I want to use a custom font to display Emoji. I’m finding that I need to use greater specificity to enforce the use of the font, including things like a <span class="emoji">. Your exact needs will determine how you want to apply the font. Normal CSS rules apply.)

If you’re dealing with Emoji like I am, you might also be interested in this property:

Debugging fonts with the Adobe "NotDef" font

I had a terrible time getting certain Emoji with combined characters joined with a zero-width joiner (zwj) character to show up on Chrome. (I don’t use Chrome, but I still care about the wayward souls who do.)

At first, I thought it was all of the zwj cases, but when I used a Ruby script (check_for_zwj.rb) to print them all out from my data set into a contact sheet, I realized it was more like half of them.
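The check itself is simple to sketch. This is not the check_for_zwj.rb script mentioned above (that one is Ruby and isn't shown here), just a minimal Python illustration of the same idea. The `sample` list and the `zwj_sequences` name are made up for the example; a real data set would be loaded from wherever your Emoji list lives.

```python
ZWJ = "\u200d"  # ZERO WIDTH JOINER


def zwj_sequences(emoji_list):
    """Return only the entries that contain at least one zero-width joiner."""
    return [emoji for emoji in emoji_list if ZWJ in emoji]


# Hypothetical sample data, written as escapes so the code points are explicit.
sample = [
    "\U0001F43B",              # bear (single code point)
    "\U0001F408\u200D\u2B1B",  # cat + ZWJ + black large square
    "\U0001F415",              # dog (single code point)
    "\U0001F415\u200D\U0001F9BA",  # dog + ZWJ + safety vest
]

# Print each joined sequence next to its code points for a quick contact sheet.
for emoji in zwj_sequences(sample):
    print(emoji, " ".join(f"U+{ord(ch):04X}" for ch in emoji))
```

Printing the code points next to each glyph makes it easier to tell which sequences a browser is failing to join.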

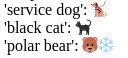

For example, here’s a screenshot of three Emoji glyphs that use two characters joined by a zwj as rendered by Firefox:

And here’s the exact same data rendered by Chrome:

Indeed, a polar bear Emoji is formed by joining bear and snowflake characters with a zwj. But Chrome just renders the separate characters. The service dog (dog + zwj + safety vest) and black cat (cat + zwj + black large square) are formed the same way, but they render fine for some reason.
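To see exactly what is inside each of those three sequences, a small Python sketch can list the code points. The sequences below are an assumption: they are the fully-qualified forms from Unicode's emoji data, which may differ from what is in any particular data set. In that form, the polar bear ends with a variation selector (U+FE0F) and the other two do not. Whether that difference has anything to do with the Chrome behavior is not something this sketch can tell you.

```python
import unicodedata


def describe(sequence):
    """Return (code point, character name) pairs for an emoji sequence."""
    return [
        (f"U+{ord(ch):04X}", unicodedata.name(ch, "<unnamed>"))
        for ch in sequence
    ]


# Assumed fully-qualified forms; your own data may store them differently.
SEQUENCES = {
    "polar bear": "\U0001F43B\u200D\u2744\uFE0F",
    "service dog": "\U0001F415\u200D\U0001F9BA",
    "black cat": "\U0001F408\u200D\u2B1B",
}

for label, seq in SEQUENCES.items():
    print(label)
    for code, name in describe(seq):
        print(f"  {code}  {name}")
```

The `"<unnamed>"` default is there because older Python versions ship older Unicode tables and may not know the names of newer characters.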

I wasted a ton of time trying to prove my font contained the polar bear glyph. I figured either my font was wrong or Firefox was misleading me with the Twemoji fallback (which would look identical to the Twemoji subset I was testing!)

It’s hard to believe that Chrome has had this font rendering bug for so long. I’ve seen bug reports that might be related, but they’re quite old. I suppose it’s possible that something specific to this font is, indeed, triggering a Chrome bug. I’ve learned more than I want to about the various tables and properties of fonts on this journey.

It’s hard to know what to trust when there are so many moving parts.

But then I came across a utility font that helped me lay my doubts to rest:

The Adobe NotDef testing font

Adobe NotDef is "a special-purpose OpenType font that is intended to render all Unicode code points using a very obvious .notdef glyph".

Specifically, it draws all characters as a box with an X through it.

As soon as I learned about it, I knew exactly how I could use it to make sure my custom subset web font was really being used. Since NotDef covers all code points, you can put it last in your font-family declaration and be sure the browser isn’t rendering a glyph with a system fallback: anything your font doesn’t cover shows up as an obvious X-ed box instead.

I used fontTools subset (as described in my web font subset card) to turn the original 214 KB AND-Regular.ttf file into a tiny 2.7 KB AND-Regular.woff2. (Specifically, I made a "subset" with --unicodes="*" to include all characters.)
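The exact command lives in the subset card, not here, so the following is only a guess at its shape. This sketch builds a pyftsubset command line (pyftsubset is the fontTools subsetting CLI). The `--flavor` and `--output-file` options are assumptions about how the WOFF2 file could be produced, and `pyftsubset_args` is a made-up helper name.

```python
import shlex


def pyftsubset_args(src, dest):
    """Build a pyftsubset command that keeps every character mapped in the font."""
    return [
        "pyftsubset",
        src,
        "--unicodes=*",     # "*" keeps all Unicode characters mapped in the font
        "--flavor=woff2",   # assumed: WOFF2 output (fontTools needs brotli for this)
        f"--output-file={dest}",
    ]


cmd = pyftsubset_args("AND-Regular.ttf", "AND-Regular.woff2")

# Show the command in a copy-and-paste friendly, shell-quoted form.
print(shlex.join(cmd))

# To actually run it (requires fontTools installed and the source font on disk):
# import subprocess
# subprocess.run(cmd, check=True)
```

Passing the arguments as a list avoids the shell expanding the `*` before pyftsubset ever sees it.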

Here’s the file for you to use in your own adventures:

- AND-Regular.woff2 (2.7 KB)

Here it is in action. This is a completely normal passage of ASCII text:

(You can copy-and-paste it and see that it’s just normal text.)

I used that to test my Twemoji font on this test sheet:

This is how I’m using it as a fallback on that sheet:

    <style>
      /* custom subset emoji font */
      @font-face {
        font-family: 'Custom Twemoji';
        src: url("Twemoji.Mozilla.subset.woff2") format("woff2");
      }

      /* Adobe NotDef font */
      @font-face {
        font-family: 'NotDef';
        src: url("AND-Regular.woff2") format("woff2");
      }

      span.emoji {
        font-family: "Custom Twemoji", "NotDef";
      }
    </style>

Now I know for absolute certain that the polar bear glyph is being served by my subset font and not the browser.

Optional: Specify the range of characters supported by the font

I’m way less confident about this next part, but in theory, it would also be helpful to specify the Unicode codepoint ranges supported by this font so the browser knows to only try to render those characters with it.

(Ideally, specifying the supported ranges would not delay the drawing of other characters until the font has been loaded, but that didn’t work in practice for me. Maybe I was doing it wrong. I don’t know how I could have debugged it more effectively.)
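Writing out the ranges by hand gets tedious for anything bigger than a few characters. Here is a minimal Python sketch that turns a list of code points into a unicode-range value and merges consecutive runs into `U+start-end` ranges. The `unicode_range` function is a made-up helper, and where the code points come from (your subset list, the font's character map, and so on) is left up to you.

```python
def unicode_range(code_points):
    """Format code points as a CSS unicode-range value, merging consecutive runs."""
    points = sorted(set(code_points))
    parts = []
    i = 0
    while i < len(points):
        start = end = points[i]
        # Extend the current run while the next code point is adjacent.
        while i + 1 < len(points) and points[i + 1] == end + 1:
            i += 1
            end = points[i]
        parts.append(f"U+{start:X}" if start == end else f"U+{start:X}-{end:X}")
        i += 1
    return ", ".join(parts)


# Individual letters stay as separate entries.
print(unicode_range(ord(ch) for ch in "aeiouy"))

# Adjacent code points collapse into a single range.
print(unicode_range([0x1F600, 0x1F601, 0x1F602, 0x1F43B]))
```

The resulting string can be pasted after `unicode-range:` in an @font-face rule.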

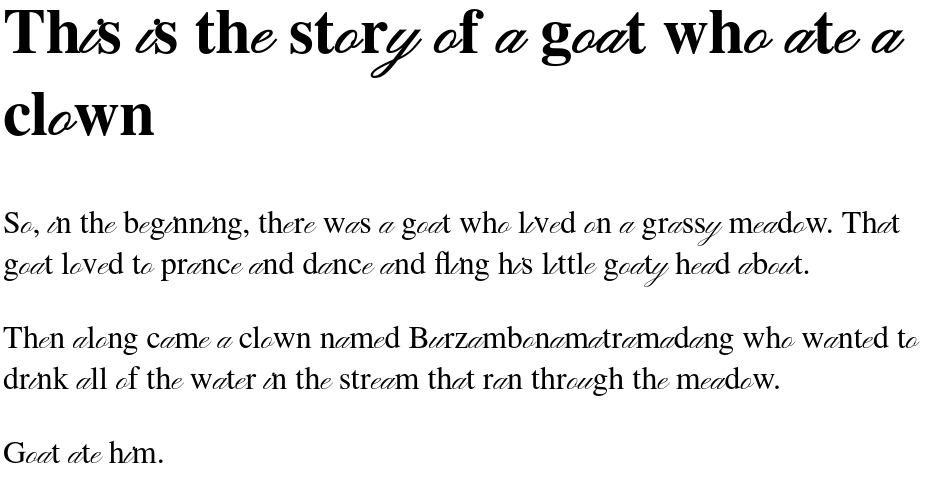

I do know specifying one or more codepoint ranges for a font face works in principle because I made this funny test where I used a script font for just the vowels (the font itself contained all the usual Latin characters):

    @font-face {
      font-family: 'Pinyon Script';
      src: url("pinyon-script.woff2") format("woff2");
      /* just the lower case vowels */
      unicode-range: U+61, U+65, U+69, U+6F, U+75, U+79;
    }

    body {
      font-size: 2em;
      font-family: "Pinyon Script", serif;
    }

Screenshot of result:

That’s it. The NotDef font was the only fun part in this particular leg of the journey. I’m just glad to be reaching a fairly satisfying finish line in my Emoji picker saga.