Dave's book review for The Art of Doing Science and Engineering

Author: Richard W. Hamming

Pages: 403

Finished reading: TBD


My Review/Notes

Hello, this is a work in progress. I’ve finished typing my hand-written notes for the final chapters and am on my second pass through the whole review.

This will be unusually long. I’m doing an informal book club started on Mastodon with Shae Erisson (scannedinavian.com) and Brit Butler (kingcons.io). Here’s the shared space for the book club notes (github.com). I don’t have a GitHub account anymore, so my notes aren’t there, just here.

I think it’s important to note that my impression of Hamming improved considerably by the end of the book to the point where I think I misunderstood some of the earlier chapters. Maybe that’s partially his fault and partially mine, but I will say that if you’re not getting it or not feeling it, sticking it out 'til the end or maybe even skipping some chapters might work for you too.

Contents

(Note that these chapter titles are mine, not the exact originals):

- Chapter 1 Orientation
- Chapter 2 The digital revolution
- Chapter 3 History of hardware
- Chapter 4 History of software
- Chapter 5 History of applying computers
- Chapter 6 "AI"
- Chapter 7 More "AI"
- Chapter 8 Think about "AI"
- Chapter 9 A mathematician’s journey to n-Space
- Chapter 10 (En)coding theory 1
- Chapter 11 (En)coding theory 2
- Chapter 12 Error-correcting codes
- Chapter 13 Information theory
- Chapter 14 Digital filters 1
- Chapter 15 Digital filters 2
- Chapter 16 Digital filters 3
- Chapter 17 Digital filters 4
- Chapter 18 Simulation 1
- Chapter 19 Simulation 2
- Chapter 20 Simulation 3
- Chapter 21 Fiber optics
- Chapter 22 Computer-aided instruction
- Chapter 23 Math!!!
- Chapter 24 Quantum mechanics
- Chapter 25 Creativity
- Chapter 26 Experts
- Chapter 27 Unreliable data
- Chapter 28 Systems engineering
- Chapter 29 You get what you measure
- Chapter 30 You and your research

Foreword by Bret Victor

This was excellent! I already knew that Bret (worrydream.com) was good at explaining things. This lucid introduction to "Hamming on Hamming" is no exception.

(Tangential note: when I look for PDF copies of influential computer science papers, I’m amazed at how often my search results in a link to Bret Victor’s website.)

Though I later found that Victor was re-stating much of what Hamming himself says about this book in the Preface, Introduction, and Chapter 1, I think Victor’s introduction is more fun to read - and it also has the benefit of being able to talk about Hamming in a way that Hamming himself cannot because he’s the author in question.

Chapter 1: Orientation

The first chapter is really an introduction to the book as a course of study. And the goal is meta-education (don’t forget, the book’s subtitle is "Learning to Learn").

As warned, there is a little math. It’s an example "back of the envelope" calculation of the explosive growth of new information to be studied in each new generation. This math digression relates to the chapter, but the point is not really to drive home how we arrive at some numbers, but rather to illustrate that this calculation is the sort of thing that great thinkers might do.

Given this explosive growth of new information, Hamming instructs us to concentrate on "the fundamentals". And how do we figure out what will be "fundamental" in the future? By trying to predict it!

Of course, no one can predict the future. But by having a personal "vision" of the future, you can steer yourself towards a goal even as new information comes to light. There’s a tension between learning essential new things, but also not chasing every shiny object or fad that crosses your path. (A fact that I think seasoned programmers, in particular, can relate to.)

Hamming also talks a lot about doing "great" work and being "great". I like what Brit wrote about this chapter (github.com) because it echoes a lot of my concerns about what "greatness" sounds like in the year 2025, as opposed to what it sounded like in the era in which Hamming wrote it not that long ago:

"In short, I don’t know that I really care about greatness any more. At least not individually. But I am very interested in how as a culture, it seems we no longer incentivize doing great work or accomplishing hard things as opposed to becoming wealthy (or wealthier). A poor motivator if the goal is innovation or any sort of enduring advancement."

Well said.

Hello, second-pass Dave here. As you’ll see if you make it to the end of my notes, I realized while reviewing the final chapter that Hamming does not prescribe one single meaning of "great", but leaves it to the reader to define. I don’t claim to know Hamming’s inner thoughts, but this book absolutely makes a case for the reader to make a personal definition of what it means to be "great" and do "great work".

Lastly, having a vision or goal is a way to steer towards "total happiness rather than the moment-to-moment pleasures." And, "a life without struggle on your part to make yourself excellent is hardly a life worth living."

Followed in pursuit of your (ethically tenable) goals, it’s profound advice.

Chapter 2: The digital revolution

Right in the first sentence, I’m confronted with my ignorance of mathematics and physics. Hamming says,

"We are approaching the end of the revolution of going from signaling with continuous signals to signaling with discrete pulses, and we are now probably moving from using pulses to using solitons as the basis for our discrete signaling."

I had to look up the word soliton.

- https://en.wiktionary.org/wiki/soliton - "A solitary wave which retains its permanent structure after interacting with another soliton." Hmmmm…
- https://en.wikipedia.org/wiki/Soliton - "A single, consensus definition of a soliton is difficult to find." Great!

Ha ha, I’m being unfair to the Wikipedia entry. In the first paragraph, it says essentially the same thing as the Wiktionary definition.

Further, it had this nice quoted passage from John Scott Russell writing about his discovery in 1834:

"I was observing the motion of a boat which was rapidly drawn along a narrow channel by a pair of horses, when the boat suddenly stopped – not so the mass of water in the channel which it had put in motion; it accumulated round the prow of the vessel in a state of violent agitation, then suddenly leaving it behind, rolled forward with great velocity, assuming the form of a large solitary elevation, a rounded, smooth and well-defined heap of water, which continued its course along the channel apparently without change of form or diminution of speed. I followed it on horseback, and overtook it still rolling on at a rate of some eight or nine miles an hour, preserving its original figure some thirty feet long and a foot to a foot and a half in height. Its height gradually diminished, and after a chase of one or two miles I lost it in the windings of the channel. Such, in the month of August 1834, was my first chance interview with that singular and beautiful phenomenon which I have called the Wave of Translation."

Solitons appear to come up in a variety of physics settings (fiber optics, magnetics, nuclear reactions, and even a sort of science fiction spaceship warp propulsion known as the "Alcubierre drive" which is, to some degree, a product of mutual inspiration between Star Trek and physicist Miguel Alcubierre - I’ll have to keep an eye out for that one now that I know about it). But as near as I can tell, we haven’t proven or disproven Hamming’s prediction on this one yet.

Moving on to the second sentence and beyond…

The chapter is about the move from continuous (analog) signaling to discrete (digital) and why this has happened.

The first reason is that analog signals need to be amplified in order to be transmitted over a long distance. I remember reading an anecdote about early attempts to send voice signals across the Atlantic ocean by cable in which, if I remember correctly, the amplification produced a hopelessly messy blast of sound and also melted the cable!

(For the above, am I thinking of the Neal Stephenson article in Wired called "Mother Earth Mother Board" or the Claude Shannon biography I read?)

By contrast, digital signals are vastly easier to transmit long distances because we can clean them up and even correct them for errors as we pass them along, producing an exact copy on the other end.
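To make that contrast concrete, here is a minimal Python sketch of a signal passing through a chain of repeaters. Nothing in it comes from the book: the `relay` function, the hop count, and the noise level are all hypothetical, and it assumes the noise on any one hop is small enough that a 0 can never be mistaken for a 1.

```python
import random

def relay(bits, hops, noise, regenerate, seed=1):
    """Pass a signal through `hops` noisy links (illustrative model only).

    If `regenerate` is True, each repeater snaps the value back to 0 or 1
    (digital). Otherwise it forwards whatever arrived, noise and all (analog).
    """
    rng = random.Random(seed)
    signal = [float(b) for b in bits]
    for _ in range(hops):
        # Every link adds a little bounded noise to every sample.
        signal = [s + rng.uniform(-noise, noise) for s in signal]
        if regenerate:
            # A digital repeater decides "0 or 1?" and sends a clean copy.
            signal = [1.0 if s >= 0.5 else 0.0 for s in signal]
    return signal

message = [1, 0, 1, 1, 0, 0, 1, 0]
analog = relay(message, hops=50, noise=0.2, regenerate=False)
digital = relay(message, hops=50, noise=0.2, regenerate=True)

print([round(s, 2) for s in analog])   # values have drifted away from clean 0s and 1s
print([int(s) for s in digital])       # same bits as the original message
```

In the analog case the noise from every hop piles up, while in the digital case each repeater throws the noise away before it can accumulate. Real links also need the error correction mentioned above for the cases where the noise is large enough to flip a bit.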

Also, here’s a fascinating way to phrase a fact about digital information:

"We should note here transmission through space (typically signaling) is the same as transmission through time (storage)."

Oh, and this tantalizing bit:

"Analog computers have probably passed their peak of importance, but should not be dismissed lightly. They have some features which, so long as great accuracy or deep computations are not required, make them ideal in some situations."

I only know a little bit about analog computers that calculate using continuous electrical logic rather than binary/digital logic. And what little I know makes me wonder if they’ll someday make a resurgence in applications where they provide the best low-power solution for a computation? Obviously, we would pair the analog circuits with digital circuits to make a hybrid computer in whichever ways make sense. Also, we can compute with mechanics, fluids, molds, fungus, and folded paper. So who knows what the future will bring?

Second: Digital is cheaper. Especially once we figured out integrated circuits.

TODO: Link to Crystal Fire, once I get that book review up, someday…

Third: Information is increasingly what we work with rather than physical goods. (True and increasingly true!)

Also: Robotic manufacturing and engineering (with ever more complex products that require ever more complex maintenance), increased data-crunching power for simulations used in science, especially really costly stuff like atomic bombs.

Hamming makes a point about over-reliance on simulation in science - he was an early advocate, but he’s well aware that for hundreds of years, "Western" thought centered around too much book learning and not enough direct observation and experimentation in the actual world. You always have to go test things in the real world to see if the simulations are correct.

Societal effects, people management, and central planning:

"The most obvious illustration is computers have given top management the power to micromanage their organization, and top management has shown little or no ability to resist using this power."

Hamming goes on at length about the evils of micromanagement. I remember that word being used a lot in the late 1990s when I was entering the workforce. I don’t think that’s the biggest problem we’re facing with huge corporations anymore?

Edit: I must also note that I’ve never worked in a company with more than 100 employees. So I have zero first-hand experience with the sort of micromanagement Hamming is talking about here. What I mean by the above is that from the outside, the ills brought upon us by big corps haven’t seemed to be driven by "micromanagement" so much as just sheer, old-fashioned bad ideas, bad leadership, and bad motivations.

Second edit: I’ve talked with two people about this and…the disappearance of "micromanagement" from the common vernacular is, indeed, just my own perception. It’s still just as prevalent as a term, and problem, as it ever was. It’s clear to me now that I was simply applying the term too narrowly.

Another Hamming prediction caught my eye, but Shae expressed it best (github.com) with:

"…he suspects that large companies will lose out to smaller companies. […] because smaller companies have less overhead and their top management is not distant from the “people on the spot” […] If Hamming is right, why are there still big companies at all? Maybe Hamming didn’t consider that large companies could afford to purchase and consume their smaller competitors?"

Entertainment: Well, computers now completely dominate here and Hamming has been proven 100% correct. I’m fascinated by (and, if I’m honest, dreading) the "AI" stuff he hints will be coming in Chapters 6-8. But it will be interesting since he wrote this in 1996!

Warfare: Hamming writes:

"It is up to you to try to foresee the situation in the year 2020 when you are at the peak of your careers. I believe computers will be almost everywhere since I once saw a sign which read, 'The battle field is no place for the human being'."

Though we’ve had remotely-piloted and autonomous military aircraft for quite a while now, I’m writing this in 2025, at which point we are at the beginning of a whole new chapter of warfare: with inexpensive quadcopter and watercraft "drones" accounting for a huge percentage, perhaps even the majority (?), of strikes in the defense against the Russian invasion of Ukraine. The potential is terrifying. But maybe this stuff will save lives in the long run? I have no idea.

Back-of-the-envelope calculation break! Hamming does some calculus here, which I have to assume is really great and totally satisfying if you’re into continuous mathematics. I’ll be perfectly honest. I’ve never found modeling rates of growth to be all that interesting. Especially something as abstract as "innovation". Actually, the more I think about it, I find this particular exercise actively boring, in a way that has nothing to do with not being able to easily follow the mathematics. I’m sure others will enjoy it.

Lastly, Hamming makes a recommendation/prediction: General-purpose ICs will tend to be a better choice than specialized custom ICs. This is based on the rate of innovation, the advantages of shared knowledge, and the price advantages of scale. Good job, Hamming! Absolutely correct. In 2025, there are a vast number of different microcontrollers and other ICs available off-the-shelf and it is extremely rare to need something truly custom except at enormous scale.

I’m excited about the next chapter, which will be about the history of computers!

Chapter 3: History of hardware

Here we go!

I know of the abacus (I actually had one as a kid, but at that age, I didn’t have the patience to understand the little instruction book that came with it, so I just clicked the little beads).

But "sand pan" is new to me… I can’t find any reference to that type of device on Wikipedia, nor even on Marginalia Search (marginalia-search.com), which has the rare distinction of performing real "exact match" web searches. (I’ve only turned up a military operation of that name, gold panning equipment, geological surveys, a child’s craft activity for Biblical studies, and barbecue techniques.)

Hamming says the next big leap in calculation came from John Napier’s invention/discovery of logarithms (in the form of tables), and from there, the slide rule.

I find the subject of logarithms to be quite fascinating and I’ve long felt that I should make a study of them specifically because of how often they come up in computer science and the history of computation (the "big-O" notation, slide rules, etc.). So I’ve made a stub card to expand at some later date: Logarithms.
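As a small reminder of why logarithms were such a leap for calculation, here is a hedged Python sketch of the trick that log tables and slide rules rely on: adding two logarithms is the same as multiplying the original numbers. The values of `a` and `b` are arbitrary examples, not anything from the book.

```python
import math

a, b = 37.0, 4.2

# Look up the two logarithms (a printed table or a slide-rule scale did this step).
log_sum = math.log10(a) + math.log10(b)

# Adding logarithms multiplies the original numbers: 10**(log a + log b) == a * b.
product_via_logs = 10 ** log_sum

print(product_via_logs, a * b)  # the two agree up to floating-point rounding
```

A slide rule does the addition physically, by sliding one logarithmic scale along another.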

Next come the mechanical computers - typically very specialized for a particular task. I find these fascinating as well. Mechanical computers always make me think of The Clock of the Long Now (wikipedia.org), which contains a mechanical computer to convert the pendulum movement to the display of astronomical phenomena and the year according to the Gregorian calendar.

One of the interesting things about the Clock of the Long Now is that they tried lots of different designs for the mechanical computer, but ended up with digital mechanics for a lot of the same practical reasons digital electronics and digital information are so much handier than analog equivalents. And this is something that Hamming mentions here - that mechanical computers have been both "analog" and "digital" since the beginning. For example, Napier not only developed a logarithmic method of calculating, but his "Napier’s Bones" (ivory rods) were a digital calculation system.

There’s Babbage in the 1820s, of course.

New to me was the Comptometer (1887) by Dorr Felt. I thought the whole Wikipedia entry for the Comptometer (wikipedia.org) was really interesting:

"A key-driven calculator is extremely fast because each key adds or subtracts its value to the accumulator as soon as it is pressed and a skilled operator can enter all of the digits of a number simultaneously, using as many fingers as required, making them sometimes faster to use than electronic calculators."

Also interesting to me were the details about the Comptometer’s error detection and locking parts of the keyboard until errors were manually corrected.

There was a veritable explosion of these calculating machines in the late 1800s, and the Comptometer Wikipedia entry has a timeline with the dates.

Punch cards come from the mechanical computer/calculator era for storage of data and rudimentary programming. I’m a little surprised Hamming didn’t mention the Jacquard loom, which used punched cards in the early 1800s (but, of course, you can’t mention everything in a short chapter!)

Side note: I once visited a textile museum in Rhode Island, where they had a working punchcard-driven loom that could produce woven bookmarks with letters that spelled out the museum name. I bought one and I’ve got that souvenir around here somewhere.

IBM (International Business Machines!) dominated in the early 1900s with punch cards and mechanical calculating machines.

Okay, I’d better shorten up these notes - I find all of this stuff super interesting, but if I dive into any of it, I’ll never finish my notes or this chapter: Zuse (electrical relays), ENIAC (vacuum tubes), etc.

Okay, I know what I wrote above, but here’s another quick note because I simply can’t help myself: After reading this book, but before finishing this page, I read a book about Grace Hopper, who got her start programming Howard Aiken’s Mark I mechanical computer (wikipedia.org). The book focused on Hopper, not the Mark I, but it still contained a bunch of fascinating facts about the computer. For one, it was electromechanical and ran off of a drive shaft, which limited the calculating speed of the machine to the RPM of the shaft! The other interesting thing about it is that it was arguably more "programmable" than the ENIAC! This is a contentious statement, but the ENIAC required "programming" by extensive physical re-wiring with a plugboard and switches, whereas the Mark I took its instructions from punched paper tape.

Now Hamming puts up an interesting table with the comparative speed of operations per second. His table ends in the era in which he was writing the book - the early 1990s. He’s using operations per second, but we can probably substitute floating point operations per second (FLOPS) for any of the modern stats. From hand calculators (20 OPS) to 1990s digital supercomputers (10^9 OPS/FLOPS or gigaflops).

For a fun comparison, the old-ish Ryzen 5 CPU in the desktop I’m typing this on benchmarks at about 450 gigaflops and you can buy one right now for about $80.

And he makes a prediction that we can check (now that per-processor speeds have been slowing considerably):

"Even for von Neumann type machines there is probably another factor of speed of around 100 before reaching the saturation speed."

Well, a factor of 100 on top of 10^9 would be 10^11, right?

So how did we do?

The Supercomputer (wikipedia.org) entry has us at 10^18 FLOPS. But it’s really not that simple because at some point we just started making supercomputers as huge clusters of Linux machines running on x86 (or ARM) CPUs, and various GPUs. To some degree, this is pretty much just a ton of consumer-grade processing done in massive parallel.

Anyway, I reckon we got a lot more than a "factor of 100" out of this architecture on the high end of parallel processing supercomputers.
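The arithmetic behind that comparison, as a quick Python sketch. The variable names are just labels for the figures already quoted above. Keep in mind the caveat from the previous paragraphs: Hamming's prediction was about a single von Neumann machine, while the 10^18 figure comes from massively parallel clusters, so this is not an apples-to-apples comparison.

```python
ops_1990 = 10**9                     # top of Hamming's table: 1990s supercomputer
predicted_ceiling = ops_1990 * 100   # "another factor of speed of around 100"
exascale = 10**18                    # figure from the Supercomputer Wikipedia entry

print(predicted_ceiling == 10**11)       # True
print(exascale // ops_1990)              # 1000000000 (a factor of 10^9 overall)
print(exascale // predicted_ceiling)     # 10000000 (10^7 past the predicted ceiling)
```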

This stuff is hard to compare, actually, but Hamming puts it well in the next bit, "the human dimension," when he writes:

"…Thus in 3 seconds a machine doing 10^9 floating point operations per second (flops) will do more operations than there are seconds in your whole lifetime, and almost certainly get them all correct!"

To hammer the point home further:

"The purpose of computing is insight, not numbers."
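The three-second claim quoted a little earlier is easy to check with a back-of-the-envelope calculation of the sort Hamming likes. This is a rough sketch: the lifespans in the loop are assumed round numbers, and a year is taken as 365.25 days.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60   # about 3.16e7

def lifetime_seconds(years):
    """Seconds in a lifetime of the given length."""
    return years * SECONDS_PER_YEAR

ops_in_three_seconds = 3 * 10**9   # 3 seconds at 10^9 operations per second

for years in (70, 80, 90, 100):
    secs = lifetime_seconds(years)
    # Print the lifespan, its length in seconds, and whether the machine "wins".
    print(years, f"{secs:.2e}", ops_in_three_seconds > secs)
```

Under these assumptions the claim holds for any lifetime up to roughly 95 years and only just fails for a full century.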

To ensure everyone is "on the same page," so to speak, he reduces the modern design of computing hardware to: Storage (of instructions and data), Control Unit (gets and executes instructions), a Current Address Register, an ALU (does the actual calculating), and I/O for communicating with the outside world.

That is, the CPU is just a machine that operates on instructions. At this point, he gets slightly philosophical and makes vague threats about those looming "AI" chapters to come.
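The parts listed above fit in a few lines of Python. This is only a toy sketch: the instruction names (`IN`, `LOAD`, `ADD`, `STORE`, `OUT`, `HALT`) and the memory layout are made up for illustration and are not taken from the book or from any real machine.

```python
def run(memory, inputs):
    """Toy stored-program machine: instructions and data share `memory`."""
    address = 0          # current address register
    accumulator = 0      # the ALU's single working value
    inputs = list(inputs)
    outputs = []         # stand-in for I/O with the outside world

    while True:
        # Control unit: fetch the instruction at the current address...
        op, arg = memory[address]
        address += 1
        # ...then decode and execute it.
        if op == "IN":
            accumulator = inputs.pop(0)
        elif op == "LOAD":
            accumulator = memory[arg]
        elif op == "ADD":
            accumulator = accumulator + memory[arg]   # the ALU does the arithmetic
        elif op == "STORE":
            memory[arg] = accumulator
        elif op == "OUT":
            outputs.append(accumulator)
        elif op == "HALT":
            return outputs

program = [
    ("IN", None),      # 0: read a number
    ("STORE", 7),      # 1: save it in cell 7
    ("IN", None),      # 2: read another number
    ("ADD", 7),        # 3: add cell 7 to it
    ("OUT", None),     # 4: write the sum
    ("HALT", None),    # 5: stop
    None,              # 6: unused
    0,                 # 7: data cell
]

print(run(program, [2, 3]))  # [5]
```

Note that the program and its data sit in the same list, which is the "storage of instructions and data" part of the description.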

That’s it for Chapter 3. I’m going to have to be really careful about keeping my notes shorter because the next chapter is the software portion of computer history, one of my passions!

Chapter 4: History of software

Let’s see if I can stay true to my word and keep this chapter’s notes short.

This is a great summary of the really early history of software development back to the days of programming in binary with absolute machine addresses by someone who was there while it was happening.

By the time I started programming computers, "spaghetti code" was used to mean "too many GOTOs". But Hamming clearly demonstrates how the original pasta was made by patching absolute memory addresses!

The lesson from this chapter is clearly to not resist obvious tooling improvements. Writing of programmers who clung to extremely laborious manual machine code programming versus languages like FORTRAN:

"First, it was said it could not be done. Second, if it could be done, it would be too wasteful of machine time and capacity. Third, even if it did work, no respectable programmer would use it—it was only for sissies!"

The thing is, I know I should be laughing at these old dinosaurs: "Ha ha, how silly! The fools couldn’t see how the computer could assist in programming computers!"

But here I am in the accursed year 2025 and it’s being seriously suggested that all programming (and every other creative endeavor, for that matter) should be completely turned over to so-called "AI" cloud services.

And I just can’t laugh at those poor old manual coding dinosaurs anymore. I feel…deeply sympathetic for them.

Moving on, I totally agree with Hamming’s opinion about the proven success of human-oriented programming languages that are designed to help humans accomplish the task rather than stick with some sort of logical purity. Though I have yet to be convinced that a "natural language" angle has ever been a good way to bridge the gap!

I’ve never heard anyone express this sentiment about Algol before:

"Algol, around 1958–1960, was backed by many worldwide computer organizations, including the ACM. It was an attempt by the theoreticians to greatly improve FORTRAN. But being logicians, they produced a logical, not a humane, psychological language and of course, as you know, it failed in the long run. It was, among other things, stated in a Boolean logical form which is not comprehensible to mere mortals (and often not even to the logicians themselves!)."

Edit (with rant): After discussing this part on Mastodon, I found myself getting increasingly hot and bothered by the implications underlying Hamming’s opinions about computer programming. In short: A programming language should be designed for humans.

Oh, I agree completely.

But if you read the surrounding context, he goes further than that. What I think he’s really asking for is a programming language (or interface) for laypeople, not professional programmers.

And yet. And yet, Hamming also uses mathematical notation throughout this book and clearly exults in the "deep insights" of a mathematical understanding of a problem.

So which is it, Richard, are we always allowed dense notation for rigorous logical thinking or not?

Or is specialized notation just reserved for "real thinkers" like, oh, you know, serious mathematicians and scientists like yourself, Hamming, my beautiful ham-man?

Wait, but please don’t get me wrong here.

To be clear, I love the idea of making programming accessible to non-professionals. I truly believe programming of some sort is the only way to make full use of a computer. But I also think professionals and power users should have access to "sharp tools" that require and reward skill.

I thought this was really interesting and insightful:

"The human animal is not reliable, as I keep insisting, so low redundancy means lots of undetected errors, while high redundancy tends to catch the errors."

That is, programming languages with high information density (low redundancy) are hard for humans to get correct: our natural language is very squishy, but highly redundant, so the signal gets through.
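Here is one way to see the redundancy point in miniature. This is not Hamming's example, just an assumed illustration using a simple check digit. A bare string of digits has no redundancy, so any typo is still a perfectly valid number. Add one redundant digit and a single mistyped digit becomes detectable.

```python
def check_digit(digits):
    """Return the digit that makes the total digit sum a multiple of 10."""
    return (-sum(int(d) for d in digits)) % 10

def encode(digits):
    """Append the redundant check digit."""
    return digits + str(check_digit(digits))

def looks_valid(coded):
    """A coded string is plausible only if its digits sum to a multiple of 10."""
    return sum(int(d) for d in coded) % 10 == 0

original = "40215"
coded = encode(original)          # "402158"

# A single mistyped digit: the 2 becomes a 7.
typo_plain = "40715"
typo_coded = "407158"

print(typo_plain.isdigit())       # True: without redundancy the typo passes unnoticed
print(looks_valid(coded))         # True
print(looks_valid(typo_coded))    # False: the extra digit exposes the mistake
```

This simple sum catches any single wrong digit but not two digits swapped with each other. Catching more kinds of errors takes more redundancy, which is the trade-off the quote is pointing at.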

So how do you design a programming language with some redundancy to make the programmer’s intent clear? He mentions an anecdote where he approaches a language expert about the, "redundancy [we] should have for such languages":

"…he could not hear the question concerning the engineering efficiency of languages, and I have not noticed many studies on it since. But until we genuinely understand such things—assuming, as seems reasonable, the current natural languages through long evolution are reasonably suited to the job they do for humans—we will not know how to design artificial languages for human-machine communication."

I think that’s spot-on.

Also spot-on are these two conflicting ideas:

- "Getting it right the first time is much better than fixing it up later!"
- "The desire that you be given a well defined problem before you start programming often does not match reality…"

I do think comparing writing software with writing novels is a pretty decent comparison, though maybe not for the exact reasons he had in mind. (Source: I write software almost daily and have written two really terrible unpublished novels, ha ha.)

Hamming concludes this chapter on a sour note:

"Does experience help? Do bureaucrats after years of writing reports and instructions get better? I have no real data but I suspect with time they get worse! The habitual use of “governmentese” over the years probably seeps into their writing style and makes them worse. I suspect the same for programmers! Neither years of experience nor the number of languages used is any reason for thinking the programmer is getting better from these experiences."

Ouch!

Hamming’s actual experience with the field of programming languages seems to be rooted in the FORTRAN era (1950s), with exposure to the Ada era (1980s). So I do take his opinions about the field with a grain of salt.

Chapter 5: History of applying computers

Hamming’s anecdote about how learning to give good presentations led to having insights into the future of computing is really good.

"The talk also kept me up to date, made me keep an eye out for trends in computing, and generally paid off to me in intellectual ways as well as getting me to be a more polished speaker. It was not all just luck—I made a lot of it by trying to understand, below the surface level, what was going on."

He describes having to do extremely difficult mathematical work on barely capable early hardware to prove that computers could do work that wouldn’t otherwise be feasible at all…

"…Then, and only then, could we turn to the economical solutions of problems which could be done only laboriously by hand!"

I think this is an aspect of computers which is all too easy to overlook for those of us born after these machines became at least somewhat commonplace: their true value is to make things practical which would otherwise not be practical…or even possible in a human lifetime.

A human could probably render a single frame of Donkey Kong by hand on paper. But a human could never render a single frame of, say, Doom 3.

And before we had Doom 3, we had long ago proven that we could do extremely difficult nuclear simulations on computers.

Here’s a heck of a quote:

"In such a rapidly changing field as computer software if the payoff is not in the near future then it is doubtful it will ever pay off."

Incremental improvement is great (awesome, actually), but I do have to agree that the initial idea has to pay off pretty quickly…or it probably never will.

I’m struggling right now to think of notable exceptions to this!

Aside: Now I find myself thinking about Tim Berners-Lee being able to demonstrate his hypertext concept, the World Wide Web, within about a year or two and it taking off like a rocket immediately afterward. By comparison, Ted Nelson’s similar Project Xanadu has been under continuous (I think?) development for 60 years and most people have never heard of it and probably never will. (Note: I’ve long been fascinated by Xanadu’s ideas, especially its advanced linking and the transclusion of virtual documents.) Actually, this particular cautionary tale has all sorts of lessons that would be totally ripe for a whole article of their own…the value of ideas without an implementation, dreams versus pragmatism, "worse is better", etc.

Aside: And I really can’t help thinking about hype-driven "payoff" versus natural grass-roots "payoff". For example, Java had massive hype and was everywhere at one time and it did find a niche eventually (especially the JVM). But it did not take over the way it’d been hyped to do. In fact, and with great irony (given its marketing-driven name), I would argue that JavaScript is now the language of the universal machine that did take over, the Web browser, not Java and the JVM!

Ah jeez, now I’m rambling. Keep it short, keep it short.

Hamming, by his own admission, revisits Chapter 2 in recommending the use of general-purpose computers and software. But I take his point: this was once a much more controversial suggestion and one can extrapolate a lot of good ideas about what the future may hold if you trace a line from special-purpose calculating machines through the world of virtual machines and software of today. Where does that line lead in the future?

Chapter 6: "AI"

I’m so raw from the "AI" talk these days that I see the upcoming three "AI" chapters and I groan. But, of course, Hamming’s perspective on this from the 1990s should be fascinating.

What sort of things can computers do? Newell and Simon’s puzzle solving at RAND is something to look into. "Expert systems" are a term I remember from the 1990s, even though I wasn’t in the field.

"The idea is you talk with some experts in a field, extract their rules, put these rules into a program, and then you have an expert!"

Followed by this shot across the bow:

"…in many fields, especially in medicine, the world famous experts are in fact not much better than the beginners! It has been measured in many different studies!"

Ouch! Now do the study on engineers and mathematicians!

The impression I’m getting is that Hamming doesn’t have much respect for fields and abilities outside of his own. I would have fallen for this bait, hook, line, and sinker when I was younger.

He then claims that some rules-based systems have "shown great success". I’d be curious to hear an example. I’m not saying it doesn’t exist, I’d just like an example. Maybe that’s coming up?

"In Chapter 1, I already brought up the topic that perhaps everything we “know” cannot be put into words (instructions)—cannot in the sense of impossible and not in the sense we are stupid or ignorant. Some of the features of Expert Systems we have found certainly strengthen this opinion."

Here, I think Hamming may have hit the nail on the head. I keep finding reasons to cite Gilbert Ryle’s work by way of Peter Naur, which is exactly about this question (and maybe Hamming was inspired by it?). Here’s what I wrote most recently: Go read Peter Naur’s "Programming as Theory Building" and then come back and tell me that LLMs can replace human programmers.

Another bang-on quote:

While the problem of AI can be viewed as, “Which of all the things humans do can machines also do?” I would prefer to ask the question in another form, “Of all of life’s burdens, which are those machines can relieve, or significantly ease, for us?”

Yessss!!!!

I would go post this quote on Mastodon right now, but half of us are sick out of our minds from being bombarded by this subject non-stop from all sides and I try to limit how often I’m part of the problem.

Next is some fairly standard philosophy about what "thinking" really means and if it would ever be possible for a machine to "think". I think it’s a good summary.

I did give a dark chuckle at this:

I can get a machine to print out, “I have a soul”, or “I am self-aware.”, or “I have self-consciousness.”, and you would not be impressed with such statements from a machine.

Oh Hamming, if I could only transport you to the year 2025, when people are very impressed indeed by such statements.

The next (very) brief "AI" history and conceptual lesson is useful. And Hamming asks good questions at each step - none of which are really answerable in any definitive way!

I’m really curious where the next two chapters will take us…

Chapter 7: More "AI"

Some people conclude from this if we build a big enough machine then automatically it will be able to think! Remember, it seems to be more the problem of writing the program than it is building a machine, unless you believe, as with friction, enough small parts— will produce a new effect—thinking from non-thinking parts. Perhaps that is all thinking really is! Perhaps it is not a separate thing, it is just an artifact of largeness.

And there you have our current dilemma. (Well, one of them!)

I’ve been emailing someone smarter than myself who perfectly summed up the whole idea above as a question of "scale invariance". That is, if scaling a system up doesn’t change its properties, it’s scale invariant (fractals are a fun example). If LLMs are scale invariant, you’re not going to have intelligence pop into existence just by making them bigger.

(Although, we obviously can get systems that are capable of printing, "I am not scale invariant." See previous chapter notes.)

Then a discussion of the usefulness of computers and digital instruments in the field of music production, which I think makes some good (if simplistic) points. Then:

This is the type of AI that I am interested in—what can the human and machine do together, and not in the competition which can arise.

But he then has some pretty dismal things to say about computers competing with people anyway (for jobs) and the ability of your average "coal miner" to elevate to the types of work that a computer won’t replace.

Of course robots will displace many humans doing routine jobs. In a very real sense, machines can best do routine jobs thus freeing humans for more humane jobs.

I don’t think even Hamming could have foreseen that "the computers" would have come for the creative work before doing the menial work - because it turns out that’s easier to fake. :-(

He again stabs at the medical profession, suggesting that doctors could likely be replaced with expert systems eventually. I think he’s doing the classic engineer underestimation of the huge complexity of squishy real-world situations. I recognize it because I used to do it myself all the time and I know I was probably insufferable because of it.

To give Hamming credit, he mentions the legal problem: Who is to blame when a program makes a mistake? That’s an important question. But I don’t think he had the imagination or foresight in 1994 to ask the next question: Would you change your answers to any of the questions if you knew the organization using the program would do ANYTHING to make a short-term profit and would be delighted to find a way to shift blame away from the organization? (If my younger self could have heard me now. You have no idea.)

If you have gone to a modern hospital you have seen the invasion of computers—the field of medicine has been very aggressive in using computers to do a better, and better job. Better, in cost reduction, accuracy, and speed. Because medical costs have risen dramatically in recent years you might not think so, but it is the elaboration of the medical field which has brought the costly effects that dominate the gains in lower costs the computers provide.

Oh Richard Wesley Hamming, my sweet summer child. If I could transport you thirty years forward to a hospital in this country now…

But, of course, computers can be enormously useful, helpful, and wonderful. I wouldn’t love them if it weren’t so. But they must always, always, always be made to serve the user.

Computers can be helpful with calculus.

Some good practical points about robots!

He’s right about chess-playing computers eventually dominating. But this is a great quote:

But often the way the machine plays may be said “to solve the problem by volume of computations”, rather than by insight— whatever “insight” means! We started to play games on computers to study the human thought processes and not to win the game; the goal has been perverted to win, and never mind the insight into the human mind and how it works.

Wow, Hamming is all over the place with this chapter, bouncing around from idea to idea with no clear connection. We’re back to philosophy.

This is a big WTF for me:

As another example of the tacit belief in the lack of free will in others, consider when there is a high rate of crime in some neighborhood of a city many people believe the way to cure it is to change the environment—hence the people will have to change and the crime rate will go down!

Is he saying that there’s crime in a particular neighborhood because the people who live there are…just bad? I mean, I get the "free will" argument he’s going for, but I don’t think the argument makes any sense. Outside of horror movies, there aren’t just whole neighborhoods of bad people that exist for no external reason. (Again, if my younger self could hear me now… oh boy, what a shock.)

This paragraph near the end of the chapter has taken on a completely inverted meaning to me in 2025:

The hard AI people will accept only what is done as a measure of success, and this has carried over into many other people’s minds without carefully examining the facts. This belief, “the results are the measure of thinking”, allows many people to believe they can “think” and machines cannot, since machines have not as yet produced the required results.

The inverse is just as true: "…allows many people to believe machines can “think” since machines have produced the [appearance of the] required results."

Can’t argue with this:

We simply do not know what we are talking about; the very words are not defined, nor do they seem definable in the near future.

Chapter 8: Think about "AI"

This chapter is just a couple pages with a summary of the previous two in the form of a 9-point self-evaluation.

People are sure the machine can never compete… It is difficult to get people to look at machines as a good thing to use whenever they will work… It is the combination of man-machine which is important, and not the supposed conflict which arises from their all too human egos.

What I find fascinating is how much my personal feelings differ from what they would have been 10 years ago. Maybe even as little as 5 years ago? I would have been cheering all of this stuff on.

The machines aren’t the problem. Truly, they are just tools.

The problem, as ever, is who owns those tools and how those tools are used for or against people.

But I have to give Hamming full credit. The "machine learning" or "AI" field has turned out to be a big deal after all. And I don’t think that was obvious to everyone in 1994. I’m not 100% sure where the "AI" hype cycle was in the early 1990s, but I don’t think it was doing well? I imagine he was swimming upstream with these opinions and in the face of all the previous failed "AI" endeavors over the years.

Chapter 9: A mathematician’s journey to n-Space

Here’s some math. Hamming and the word "obvious":

| | Avg. Chapter | Chapter 9 |
|---|---|---|
| "obvious" | 45 / 30 = 1.5 | 2 |
| "obviously" | 23 / 30 = 0.767 | 3 |

Where am I going with this?

I have no idea.

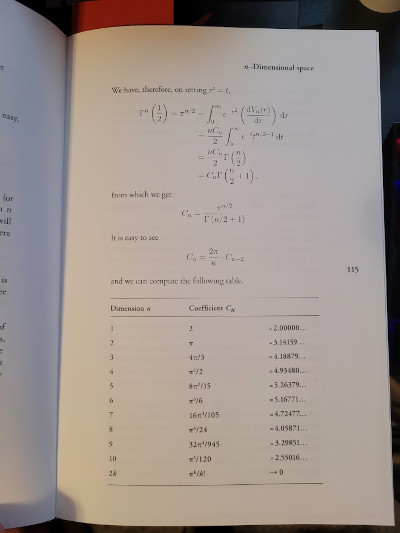

I read every word of it, but I really don’t understand what’s going on here - and I don’t think it’s just my inability to divine insights from a page that looks like this:

I mean that’s also true. But I literally don’t understand the significance of the volume shrinking in relation to the surface area of a higher-dimensional sphere:

This has importance in design; it means almost surely the optimal design will be on the surface and will not be inside as you might think from taking the calculus and doing optimizations in that course. The calculus methods are usually inappropriate for finding the optimum in high dimensional spaces. This is not strange at all; generally speaking the best design is pushing one or more of the parameters to their extreme— obviously you are on the surface of the feasible region of design!
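For what it's worth, the geometric fact behind that quote can at least be checked numerically. This is a minimal sketch, not from the book: it assumes a unit ball and a hypothetical "outer 1%" shell, and relies only on the fact that volume scales as the radius raised to the number of dimensions. The function name and the shell thickness are illustrative choices.

```python
def outer_shell_fraction(dimensions, shell_thickness=0.01):
    """Fraction of a unit n-ball's volume within shell_thickness of its surface."""
    # Volume scales as radius ** dimensions, so the inner ball of radius
    # (1 - shell_thickness) holds (1 - shell_thickness) ** dimensions of the total.
    return 1.0 - (1.0 - shell_thickness) ** dimensions


if __name__ == "__main__":
    for n in (1, 3, 10, 100, 1000):
        fraction = outer_shell_fraction(n)
        print(f"{n:>4} dimensions: {fraction:.4f} of the volume is in the outer 1%")
```

As the dimension count grows, the fraction approaches 1, which is one way to read "the optimal design will be on the surface": in a design with many parameters, there is hardly any "inside" left.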

I took Hamming at his word in the introduction in which he wrote:

If you find the mathematics difficult, skip those early parts. Later sections will be understandable provided you are willing to forgo the deep insights mathematics gives into the weaknesses of our current beliefs. General results are always stated in words, so the content will still be there but in a slightly diluted form.

Is this chapter one of the "early parts"?

If I don’t respond to "obviously" with, "Yes, of course, Hamming, my good man, it’s as clear as day!" does that mean I am meant to just skip it?

On one hand, I don’t think the mathematical insights in this chapter are translating very well to prose. It’s not that I don’t believe they exist, it’s just that I don’t think Hamming has succeeded in conveying them to me. And I think I did my part as a reader. On the other hand, I’m completely willing to accept that Hamming’s intended audience is, after all, post-graduate engineers and I’m not one of those. So maybe it will all be explained later and I’ll feel better about this.

(I also realize I’m outing myself as one of "those" programmers who doesn’t do some napkin calculus before writing a for loop. Yeah, sorry, guilty as charged.)

But you know what? I am curious how this higher-dimensional thinking will be applied later. I just hope I can understand it.

And, even better, he teased "Hamming distance". That sounded familiar. I looked it up and was pleasantly surprised to learn that it’s a concept I am familiar with: edit distance (wikipedia.org). It just happens that the metric I’m used to is Levenshtein distance (1965). Since Hamming distance only allows substitution, it can only compare strings of the same length. Levenshtein allows insertions, deletions, and substitutions - so it has no such limits for comparing the similarity of strings. Hamming introduced his distance metric in 1950.
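To make the difference concrete, here is a minimal sketch of both metrics. The function names are illustrative, and the Levenshtein version is the common row-by-row dynamic programming approach, not anything taken from the book.

```python
def hamming_distance(a, b):
    """Count positions where two equal-length sequences differ."""
    if len(a) != len(b):
        raise ValueError("Hamming distance needs sequences of equal length")
    return sum(x != y for x, y in zip(a, b))


def levenshtein_distance(a, b):
    """Minimum number of insertions, deletions, and substitutions turning a into b."""
    previous = list(range(len(b) + 1))  # distances from the empty prefix of a
    for i, x in enumerate(a, start=1):
        current = [i]
        for j, y in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,             # delete x
                current[j - 1] + 1,          # insert y
                previous[j - 1] + (x != y),  # substitute (free if they match)
            ))
        previous = current
    return previous[-1]


if __name__ == "__main__":
    print(hamming_distance("1011101", "1001001"))
    print(levenshtein_distance("kitten", "sitting"))
```

Hamming distance raises an error for strings of different lengths, while Levenshtein happily compares them.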

I’m really looking forward to the information theory stuff in this book. It looks like that may begin in the next chapter?

Chapter 10: (En)coding theory 1

Yay! Something I understand!

"Coding" in this chapter means encoding and decoding data for transmission or storage. And coding theory has its own Wikipedia page:

It’s about the ways in which we can present a stream of data: in fixed or variable-length chunks and with or without prefixes. I feel quite at home with this subject right now since I’m working on an Emoji picker utility and am neck-deep in Unicode, UTF-8 encoding, and multi-character sequences.

Hamming discusses Kraft and McMillan:

Which…uh…yeah. I’m sure that’s very nice.

This page is helpful to explain what is being discussed, though:

It just means that you can tell when a new "word" in the encoding is coming because the start sequence (prefix) can’t happen anywhere else (it isn’t valid inside of any other word.)

At an extreme end, a prefix of 0 marks the division of words in this binary sequence. The only thing we can encode are different quantities of 1 following the 0.

0111101111111110111111111

The Kraft sum is pretty cool, actually, since it tells you if your encoding is as efficient as it can be.

A perfect equality would be a binary encoding of five "words" like so:

word1 = 0

word2 = 10

word3 = 110

word4 = 1110

word5 = 1111

Nothing is wasted. The first word is a single bit. And even though it doesn’t terminate with a 0, we know 1111 is word5 because there is no other valid sequence with four `1`s.
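The five-word encoding above can be checked with a short sketch. The Kraft sum adds up 1/2^length over all the codewords, and a sum of exactly 1 means no code space is wasted. The helper names here are hypothetical, and the decoder assumes a valid prefix-free codebook.

```python
from fractions import Fraction

# The five codewords from the example above.
CODEBOOK = {
    "0": "word1",
    "10": "word2",
    "110": "word3",
    "1110": "word4",
    "1111": "word5",
}


def kraft_sum(codewords):
    """Sum of 2 ** -length over all codewords (exact, using fractions)."""
    return sum(Fraction(1, 2 ** len(word)) for word in codewords)


def is_prefix_free(codewords):
    """True if no codeword is the start of a different codeword."""
    return not any(a != b and b.startswith(a) for a in codewords for b in codewords)


def decode(bits, codebook):
    """Read bits left to right, emitting a word whenever a codeword completes."""
    words, current = [], ""
    for bit in bits:
        current += bit
        if current in codebook:
            words.append(codebook[current])
            current = ""
    if current:
        raise ValueError("input ended in the middle of a codeword")
    return words


if __name__ == "__main__":
    print(kraft_sum(CODEBOOK))
    print(is_prefix_free(CODEBOOK))
    print(decode("0101111110", CODEBOOK))
```

Because no codeword is a prefix of another, the decoder never has to look ahead or back up.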

I’m curious where this is going in terms of the "Learning to Learn" subtitle’s premise.

Chapter 11: (En)coding theory 2

I keep telling myself I’m going to get quicker at reviewing this book and I think I actually will speed up quite a bit starting now.

Part of the reason I think I’ll be speeding up is that the chapters in the middle part are fairly uniform: they explore Hamming’s "happy places" - the topics he knows best and to which he has contributed the most. His claim is that he’s demonstrating the "style" of thinking that results in great engineering work (and "Learning to Learn"). It’s hard to argue with someone who did, indeed, accomplish many foundational things in the field!

Another, less pleasant, reason is that it gets increasingly math-heavy and I’m afraid I just don’t have the classical engineering background to appreciate Hamming’s gems. Pearls before swine and all that. Oink.

But this chapter isn’t all that math-heavy. It’s reasonably interesting with explanations of Huffman codes, ISBN encoding, and such.
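As a rough illustration of the Huffman idea (frequent symbols get short codes, rare symbols get long ones), here is a minimal sketch that only computes code lengths. The symbol names and probabilities are made up for the example, and the function name is hypothetical.

```python
import heapq
from itertools import count


def huffman_code_lengths(probabilities):
    """Return {symbol: code length in bits} for a dict of symbol probabilities."""
    tiebreak = count()  # keeps heap entries comparable when probabilities tie
    heap = [(p, next(tiebreak), {symbol: 0}) for symbol, p in probabilities.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        # Merge the two least likely subtrees; every symbol inside them
        # moves one level deeper, so its code grows by one bit.
        p1, _, depths1 = heapq.heappop(heap)
        p2, _, depths2 = heapq.heappop(heap)
        merged = {s: d + 1 for s, d in {**depths1, **depths2}.items()}
        heapq.heappush(heap, (p1 + p2, next(tiebreak), merged))
    return heap[0][2]


if __name__ == "__main__":
    # Made-up probabilities for four symbols.
    probabilities = {"a": 0.5, "b": 0.25, "c": 0.125, "d": 0.125}
    lengths = huffman_code_lengths(probabilities)
    average = sum(probabilities[s] * lengths[s] for s in probabilities)
    print(lengths)
    print(f"average bits per symbol: {average}")
```

A fixed-length code for four symbols would need 2 bits each. With these particular probabilities, the variable-length code averages fewer.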

But the most interesting part, to me, is the final paragraph, which I’ll quote in whole because I think it’s quite good and worth thinking about for everyone who makes software intended for human use!:

"When you think about the man-machine interface one of the things you would like is to have the human make comparatively few key strokes—Huffman encoding in a disguise! Evidently, given the probabilities of you making the various branches in the program menus, you can design a way of minimizing your total key strokes if you wish. Thus the same set of menus can be adjusted to the work habits of different people rather than presenting the same face to all. In a broader sense than this, “automatic programming” in the higher level languages is an attempt to achieve something like Huffman encoding so that for the problems you want to solve require comparatively few key strokes are needed, and the ones you do not want are the others."

Chapter 12: Error-correcting codes

Hamming says it explicitly in the first paragraph of this chapter. Yes, this chapter is about his most famous discovery, the Hamming error-correcting codes. But it’s also about the discovery itself, and the process that probably underlies most discoveries - loading tons of information and ideas into your head, thinking really hard about them for days, weeks, months, or even years on end. And then having sparks of inspiration from the subconscious mind in dreams, in the shower, or in the back seat of a company mail delivery car (Hamming’s own story).

Hamming’s description of his discovery is very dense and I had to read it carefully, but I like the accompanying graphics of a square of intersecting bits, a triangle of bits, a cube. After that, he’s talking about n dimensions.

It’s clever and creative thinking, but Hamming wants you to know it took half a year and he had uniquely primed his mind for the challenge before it came along. I think that is the right way to think about it. And, as Hamming points out, it follows the pattern of other great discoveries in math and science.
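To see the end result in action, here is a minimal sketch of the smallest practical case, usually called Hamming(7,4): four data bits plus three parity bits. The layout (parity bits at positions 1, 2, and 4) is the conventional textbook one and is an assumption here, not a transcription of the book's derivation. The function names are illustrative.

```python
def encode(data_bits):
    """Encode 4 data bits into a 7-bit codeword (positions 1..7)."""
    code = [0] * 8  # index 0 is unused so list indices match bit positions
    for position, bit in zip((3, 5, 6, 7), data_bits):
        code[position] = bit
    for parity in (1, 2, 4):
        # Each parity bit makes the count of 1s even across every
        # position whose binary index includes that parity bit.
        code[parity] = sum(code[i] for i in range(1, 8) if i & parity) % 2
    return code[1:]


def syndrome(word):
    """XOR of the positions holding a 1; zero for any valid codeword."""
    result = 0
    for position, bit in enumerate(word, start=1):
        if bit:
            result ^= position
    return result


def correct(word):
    """Fix a single flipped bit. Returns (corrected word, error position or 0)."""
    error_position = syndrome(word)
    fixed = list(word)
    if error_position:
        fixed[error_position - 1] ^= 1
    return fixed, error_position


if __name__ == "__main__":
    sent = encode([1, 0, 1, 1])
    received = list(sent)
    received[4] ^= 1  # flip the bit at position 5 "in transit"
    print(sent, received, correct(received))
```

The neat part is that the syndrome is not just a yes/no error flag: read as a binary number, it is the position of the flipped bit.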

Chapter 13: Information theory

Right off the bat, I’m liking this:

Information Theory was created by C.E.Shannon in the late 1940s. The management of Bell Telephone Labs wanted him to call it “Communication Theory” as that is a far more accurate name, but for obvious publicity reasons “Information Theory” has a much greater impact—this Shannon chose and so it is known to this day.

The name "information theory" never made sense to me, so I’m pleased that Hamming and others felt it should be called "communication theory" instead! At least now I know I’m in good company on that one.

(Although, it’s interesting to note that Shannon’s major Bell System Technical Journal article from 1948 is titled A Mathematical Theory of Communication (wikipedia.org).)

Shannon’s definition of information involves surprise (Hamming’s word, which I like), or "information entropy" (Shannon’s).

Other major contributions from that paper:

-

A formal definition of redundancy (which goes hand-in-hand with the average entropy per symbol)

-

Statistical limits to data compression

-

The word "bit"! (Shannon credits mathematician John Tukey)
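The "surprise" idea and the redundancy definition can be sketched in a few lines. This is a minimal illustration, assuming a source that emits independent symbols with known probabilities. The function names are hypothetical, and `redundancy` assumes at least two possible symbols.

```python
import math


def entropy_bits(probabilities):
    """Average surprise per symbol, in bits: -sum(p * log2(p))."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


def redundancy(probabilities):
    """One minus the ratio of actual entropy to the maximum possible entropy."""
    max_entropy = math.log2(len(probabilities))  # all symbols equally likely
    return 1.0 - entropy_bits(probabilities) / max_entropy


if __name__ == "__main__":
    fair_coin = [0.5, 0.5]
    loaded_coin = [0.9, 0.1]
    print(entropy_bits(fair_coin), redundancy(fair_coin))
    print(entropy_bits(loaded_coin), redundancy(loaded_coin))
```

A fair coin is maximally surprising (one full bit per flip, no redundancy). A loaded coin is more predictable, so each flip carries less than a bit, and that gap is what a compressor can squeeze out.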

After the first page, we’re treated to some pages of math, which I’m sure is very interesting and enlightening if you can read it.

Anyway, why does Hamming bring up information theory? Honestly, I kinda lost the plot. But Hamming tells us: It’s to warn you about initial definitions and finding what you want to find.

For example, he makes a good point about so-called "IQ" tests: Defining IQ is completely circular. The test can be made internally consistent, but it can only measure what it was designed to measure! Hamming considers testing in the "soft sciences" to be hopeless and I think he’s got a good point with this one.

Chapter 14: Digital filters 1

Hey, you know who knows a lot about digital filters? Richard Freaking Hamming! Oh boy!

First, we’ve got a story about how Bell Labs had lost engineers who didn’t want to switch to the new-fangled analog computers. Then they lost more engineers when they switched to digital computers. The engineers, you see, were entrenched in the old ways and didn’t want to learn the new stuff!

I gotta say, reading this was a really weird experience for me. I’ve been in my programming career for over 25 years now and…I identify with those poor disgruntled engineers! I’m tired of thinking about and writing about what’s happening in the software industry in the year 2025, so I’m not going to draw the explicit parallel for you, but, you know what I’m talking about, right?

At this point, I 100% completely get why somebody would just say, "screw it" and leave when things shifted away from the skill you’ve built up your entire adult life.

Of course, with the benefit of hindsight and the circumstances of my place in history, I think digital computers are great. It’s possible to see, in hindsight, how the advantages of digital computing overtook analog methods. But rather than thrill in the prospect of what that means for our future, I’m now repulsed. I’m genuinely curious what Hamming would say to all of…this?

Moving on, Hamming tells an amusing anecdote about the time he talked an expert into writing a book about digital filters and how, over time, Hamming ended up being the author of that book.

I also appreciated that Hamming took the time to figure out the underlying mathematical basis for analyzing signals on his own. This allowed him to make connections or have intuitions that other engineers missed.

And we end the chapter with half a dozen pages of math.

Chapter 15: Digital filters 2

As in the previous chapter, Hamming starts by reminding us that sometimes an improvement of a process by an order of magnitude doesn’t just produce a better process, it allows a whole different type of process.

I know this to be true. When I reduce the friction in my own personal projects, it doesn’t mean I’m faster at the project - it means I will actually do that project versus not do it when the friction is too high.

In the same way, the speed afforded by computers allows us to do things that would be unthinkable by hand - like make 3D video games, which are real-time simulations of worlds that exist only as data.

The rest of the chapter is about some impressive mathematical things. I say "impressive" without any snark at all because to do his work, Hamming clearly did a ton of deep research and deep thinking. The result is the "Hamming window."

He’s got some advice on fame: let other people quote and cite you - it looks better and people respect it more!

Chapter 16: Digital filters 3

"We are now ready to consider the systematic design of nonrecursive filters."

Oh, you know it, Hamming! Rub those nonrecursive hams on everything my dude. They are pearls before this swine, but don’t think for a moment that my oinks and grunts are anything but the highest admiration.

Okay, so we’ve got some eigenvalues. And, as you can see, differentiation magnifies.

Gotta truncate that infinite Fourier series. And here’s some pretty graphs! Boy, I really like this single extra tan ink used in the printing of this Stripe edition. Really classy. Is Stripe Press related to Stripe, Inc.? Oh, they are. Kinda wish I didn’t know that.

Ah, the old Gibbs effect from the previous chapter. I didn’t mention that in my notes above, but it was there. I remember. We all remember.

How did Kaiser know to use the exponent 0.4? Hamming asked him. Kaiser said that he tried 0.5 and it was too big. Knowledge plus the ability to use the computer for experimentation!

Okay, here’s a great story and moral from Hamming:

"…it was completely impracticable to do with the equipment I had. Some years later I had an internally programmed IBM 650 and he remarked on it again. All I remembered was it was one of Tukey’s few bad ideas; I completely forgot why it was bad— namely because of the equipment I had at time. So I did not do the FFT, though a book I had already published (1961) shows I knew all the facts necessary, and could have done it easily!

"Moral: when you know something cannot be done, also remember the essential reason why, so later, when the circumstances have changed, you will not say, “It can’t be done”. […] When you decide something is not possible, don’t say at a later date it is still impossible without first reviewing all the details of why you originally were right in saying it couldn’t be done."

(Emphasis mine.)

I have made this exact mistake myself, so I really appreciate this one.

Chapter 17: Digital filters 4

"We now turn to recursive filters, which have the form…"

…of math. Surprise!

This one has a great analogy/story about adjusting the temperature in a shower when the water pipes have been changed to a larger diameter. This becomes a moment to talk about the problem with experts and things that are taken as fact without question: try not to get stuck in a trap of believing false (or sometimes false) things.

Chapter 18: Simulation 1

I’ve played Sim City, so I’m not sure what else…oh, like Los Alamos nuclear weapon testing.

I think this chapter is great. I know very little about setting up scientific simulations, so it was basically all new to me. The comparison of atomic bomb testing versus weather testing is really interesting: which would you guess is the more stable simulation? The answer makes sense, but might not have been my first guess: weather is less stable - small changes early on can have huge effects later.

Volume of simulation is no substitute for intimate familiarity with the thing being simulated.

Expert jargon is both useful (quickly communicate with other experts) and a curse (if it locks you into rigid thinking or serves excessive gatekeeping). I’ve seen examples of all of this, good and bad, in software engineering.

Even math equations are open to interpretation (Hamming has an example), so it’s vital that a subject expert be part of creating the simulation to make sure it makes sense.

Chapter 19: Simulation 2

Hamming has, rightly, strong words about simulations which do nothing to verify that they are accurately reproducing a useful representation of reality.

Hamming’s anecdotes remind me of a talk I watched recently by Emery Berger in which he mentioned a paper titled Growth in a Time of Debt (2010) by Reinhart and Rogoff which was based on a massive spreadsheet of data. The paper has been widely cited as the justification for "austerity" measures in Europe (notably Greece, as exemplified by a picture of a building on fire on one of Berger’s slides). When examined by researchers, the spreadsheet was found to be flawed - the correct data and calculations give the opposite result!

There’s also a discussion of analog versus digital computers for simulation. It’s interesting from a historical perspective, but digital is so fast now that analog is rarely even considered - though analog is neat because the horsepower is supplied by real-world physics and results can be basically instantaneous.

Chapter 20: Simulation 3

This chapter starts with the old "Garbage In, Garbage Out" concept. Hamming’s got examples of garbage producing garbage…but also an example of (somewhat) "garbage" data being quite useful.

And then there’s the problem of self-deception. We have double-blind studies because even well-meaning researchers can’t help but see patterns that reinforce what they want to see.

So it is with simulation. Hamming doesn’t have any easy answers other than to be very careful and intelligent about making sure your simulation is set up correctly and that you’re not fooling yourself.

Chapter 21: Fiber optics

This subject was unexpected, but Hamming explains why he chose it (it was engineering history he was able to observe first-hand from its infancy).

I do find fiber optics fascinating and I know almost nothing about it (other than, like, I get the concept). I didn’t even know that small diameters are chosen because, for reasons you can draw on a piece of paper, the light travels more efficiently, even with lots of bends.

It’s also super fast, harder to tap, less prone to electromagnetic disturbance, and the raw material is one of the most abundant materials on Earth! What’s not to love?

I have no idea where we’re at in terms of making light-based circuits with fiber, but that could be fascinating - a beam of light can cross right through another beam of light, no problem, which is something you cannot do with copper traces.

Here’s a pretty wild bit of "retro-futurism" by Hamming. I’m really curious when he penned this bit. The book was first published in 1996, but this doesn’t really sound like somebody writing in 1996?

I’ll quote these two paragraphs in their entirety without further commentary:

Let me now turn to predictions of the immediate future. It is fairly clear in time “drop lines” from the street to the house (they may actually be buried but are probably still called “drop lines”!) will be fiber optics. Once a fiber optic wire is installed then potentially you have available almost all the information you could possibly want, including TV and radio, and possibly newspaper articles selected according to your interest profile (you pay the printing bill which occurs in your own house). There would be no need for separate information channels most of the time. At your end of the fiber there are one or more digital filters. Which channel you want, the phone, radio or TV can be selected by you much as you do now, and the channel is determined by the numbers put into the digital filter—thus the same filter can be multipurpose if you wish. You will need one filter for each channel you wish to use at the same time (though it is possible a single time sharing filter would be available) and each filter would be of the same standard design. Alternately, the filters may come with the particular equipment you buy.

But will this happen? It is necessary to examine political, economic, and social conditions before saying what is technologically possible will in fact happen. Is it likely the government will want to have so much information distribution in the hands of a single company? Would the present cable companies be willing to share with the telephone company and possibly lose some profit thereby, and certainly come under more government regulation? Indeed, do we as a society want it to happen?

Chapter 22: Computer-aided instruction

I love that Hamming begins his chapter with a quote attributed to Euclid that, "there is no royal road to geometry."

Relevant: Just this very morning, I heard this fantastic quote from Jennifer Jacobs (ucsb.edu):

"I guess if you think of programming from the interface or environment perspective, yes, we should make things easy. Important asterisk there, which is: easy without restricting the important forms of power, or expression, that we want to deliver. But if you think of programming as a way of thinking, it is almost not relevant to think about how to make this way of thinking easy. We want to provide pathways into engaging this way of thinking, but you can’t trivialize the difficulty of learning abstraction."

FoC podcast, episode 48 (feelingof.com).

I think Hamming is absolutely right to be skeptical of anything that claims to make people consistently learn faster. I think this is one of those "no silver bullet" things. There are simply no known shortcuts to doing the hard work of thinking and acquiring knowledge.

There are plenty of methods that work, of course. But they all require effort on the part of the learner. Same thing with exercise!

I love his anecdote:

"When I first came to the Naval Postgraduate School in 1976 there was a nice dean of the extension division concerned with education. In some hot discussions on education we differed. One day I came into his office and said I was teaching a weight lifting class (which he knew I was not). I went on to say graduation was lifting 250 pounds, and I had found many students got discouraged and dropped out, some repeated the course, and a very few graduated. I went on to say thinking this over last night I decided the problem could be easily cured by simply cutting the weights in half—the student in order to graduate would lift 125 pounds, set them down, and then lift the other 125 pounds, thus lifting the 250 pounds."

There are absolutely ways that computer simulation can be helpful for training. Neither Hamming nor I question that. (He mentions aircraft simulation for pilot training.) But they’re tools, not shortcuts to learning.

Having said that, I’ve been a fan of Seymour Papert ever since I read his book Mindstorms, and I do believe that computers can/could be hugely helpful in visualizing and making concrete otherwise abstract concepts by letting you play with them. Especially abstract concepts.

I’ll quote again from the FoC podcast episode 48 (because it’s fresh in my mind). Here’s Steve Krouse:

"I was a Logo kid. I was a math-phobe who was exposed to Logo. I learned how to draw a circle. I eventually intuited on my own, “Oh wait, if I just walk up a little bit and turn a little bit, and do that 360 times, I got a circle.” Then when I eventually went to calculus, it was just so easy to understand what a derivative was, because I’ve walked along curves before."

(LOGO was a Seymour Papert project - it’s the language that drove a "turtle" (either robotic or on the screen) that could move and plot lines to draw pictures, etc.)
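The "walk a little, turn a little, 360 times" circle is easy to try without a turtle. This is a minimal sketch in plain Python rather than Logo, with a hypothetical `walk_circle` function that just tracks the turtle's position using trigonometry.

```python
import math


def walk_circle(steps=360, step_length=1.0):
    """Step forward, turn a little, repeat. Returns the list of visited points."""
    x, y = 0.0, 0.0
    heading = 0.0                  # radians; 0 means facing along the x axis
    turn = 2 * math.pi / steps     # one full rotation spread over all steps
    path = [(x, y)]
    for _ in range(steps):
        x += step_length * math.cos(heading)
        y += step_length * math.sin(heading)
        heading += turn
        path.append((x, y))
    return path


if __name__ == "__main__":
    path = walk_circle()
    ys = [y for _, y in path]
    print("back at start:", path[-1])
    print("approximate diameter:", max(ys) - min(ys))
```

The turtle ends up (almost exactly) where it started, and the shape it traced has a diameter close to 360 / pi, which is what a circle with a circumference of 360 steps should have.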

Chapter 23: Math!!!

I’ve been giving a lot of thought lately to the fact that natural language, while I LOVE it, has limitations in expressing precise intent. Mathematics is, in some ways, an attempt to create a truly perfect way of describing things.

I really respect Hamming’s line of thinking in this chapter and I think this, more than any of his impressive achievements, is where I get a glimpse of the depth of his thinking. Here, Hamming gets philosophical.

Hamming says that we rarely talk about what math actually is, but we know it’s useful anyway.

He’s very human in this chapter: "I had to see an analogy between parts of the problem and a mathematical structure which at the start I barely understood."

Not everything we know can be put into words. We can describe things with words, but those words are not a replacement for the actual thing. Hamming even suggests that human language may go "beyond" the limits of Gödel’s incompleteness theorems.

I’ll be honest, this chapter had me completely re-thinking how I’d approached the earlier chapters.

Chapter 24: Quantum mechanics

Starting with this chapter, the rest of this ridiculous "review" really will be shorter because I read the rest of the book in one go, then re-read the last chapters and wrote my notes in pen in a spiral notebook, limiting the word count to what I was willing to write in ink.

Here Hamming dives even further into philosophy. But first, he gives an introduction to quantum mechanics that even I could understand.

His conclusions make sense to me, even if it’s just to admit that there’s a lot we don’t understand about how the Universe works!

Chapter 25: Creativity

Great observation that some of the most valuable creativity is putting two or more existing things together. This is yet another reason Hamming implores us to study widely so that we can be on the lookout for insights that cross subject boundaries. He has several personal anecdotes in the book that back this up.

Another observation is that great creative work tends to come from a period of intense thinking followed by a temporary abandonment, allowing the subconscious to work on it. Many of these insights turn out to be false starts (I’ve experienced this - dreaming up solutions that seemed elegant and brilliant upon waking, but after trying them out or even just thinking about them over breakfast, realizing they’re complete nonsense).

I like Hamming’s list of questions he’ll ask himself when stuck. They remind me of Eno and Schmidt’s legendary "Oblique Strategies" card deck.

I thought this was interesting. Hamming repeats the old observation that the big accomplishments in many fields were performed when the scientist or inventor was young. But he also has some counter examples from other fields:

-

Accomplishments while young: Math, theoretical physics, astrophysics

-

Accomplishments when older: Music composition, literature, statesmanship

(It may seem strange to some people for an engineer to talk about creativity. But we, you and me, are not "some people". We know how vital creativity is to making progress in math, physical science, engineering, and computer science.)

Chapter 26: Experts

Put simply, Hamming sees experts as a drag on progress.

"What you did to become successful is likely to be counter-productive at a later date."

I wonder where I fit in this chapter. I don’t feel like an expert in anything, but at 25+ years in the trenches in this field, I certainly qualify as an "old hand" at it. I probably am an expert by just about any measure in a few specific things, but the enormity of the programming field keeps me feeling like a rank beginner most of the time. In terms of this chapter, I think Hamming would see that as a very good thing.

Am I not "keeping up" in my field? On the one hand, I am an "AI" hater. (I can say that now because I’m increasingly realizing that "AI" is a blanket term that has never been defined. I can hate "AI" and still be fine with the useful bits of what we were calling "Machine Learning" just a handful of years ago.)

On the other hand, as "everyone else" in software appears to be losing their minds, I’m sticking with my slow-and-steady method of learning the fundamentals and building little projects to apply them. If that’s not "keeping up", then so be it!

It’s depressing to think that this might mean that in Hamming’s eyes, I’m like one of those "experts", clinging to the old ways, impeding progress.

Hamming, if you’re out there, press my fingers and type out the answer on my keyboard from beyond the grave. I’ll just wait here for a moment with my eyes closed, waiting for you to give me a sign… Dang. Nothing, not even a twitch.

Anyway, so be it. And anyway, it doesn’t matter. If his opinion matched mine, I would feel validated and if it didn’t, I would be disappointed. But it wouldn’t prove anything. Just two different opinions.

I can’t say I disagree with the basic premise:

TODO: "iconoclasts who…" quote goes here

In the end, Hamming is trying to make us think about our responses to things. To not just go with our first reactions and assume they’re correct, but to take a deep breath and think about what we think we know. And that’s definitely advice I can agree with.

Chapter 27: Unreliable data

Hamming has a bunch of great anecdotes about bad data and they’re both enlightening and entertaining.

Truth be told, this chapter is what I imagined the whole book would be like.

It’s a dark commentary about the software industry that bad data is probably the least of our current problems. Bad data (and/or "category errors") are a problem, but I’ll feel pretty good when that’s something we can agree to concentrate on again. I feel like all known good practices we used to agonize over and debate endlessly have been thrown completely out of the window between the cursed years 2024 and 2025. (I’m typing this at the beginning of 2026 and the fever is worse than ever and I’ve never felt more dismal about the software industry. I wouldn’t recommend it to anyone.) Until we get out of this state, I can’t even be bothered to think about data. Sorry, but that’s how I feel.

Uh, I did enjoy this chapter, though. It was good.

Chapter 28: Systems engineering

This chapter is great. Hamming’s point is that optimizing parts of a system can come at the expense of the whole. Systems engineering, then, is about engineering the system as a whole.

I really like Hamming’s education examples. We tend to optimize our courses to focus on certain specifics, but we seldom put as much care in the learning as a whole. I think this applies not just to institutions, but also the way we steer our own education. When’s the last time you thought about the long-term path of your learning outside of school? (If you’re like me, maybe it was recently. But I don’t think that’s typical. And for me, that’s only been in the last 5-10 years. Prior to that, it was completely scattershot.)

A work example that I feel in my bones comes from Hamming’s reading of a series of Bell Telephone Laboratories essays by H. R. Westerman in 1975. Namely, a team can’t always be "putting out fires" or they can’t keep their skills honed.

Building a system requires deep insights. I love that Hamming points out that angst powers many improvements. It requires the engineers who know the system best to be empowered to fix the things that give them the most angst. Great improvements in software come, more often than not, from a developer who is just sick of a crappy process and sees a better way to do it. This also implies that the engineers are actually using the system as well! (We call that "dog-fooding" as in, "eating the dog food you make to prove that it’s good.")

By contrast, the client (and by extension, client-facing management) tends to know the symptoms of a problem, but the deeply invested engineer knows the underlying issue.

Hamming describes systems engineering as, "trying to solve the right problem," (emphasis mine) and I think that’s an excellent way of describing it.

I also agree with him that this is one of the most under-taught and hard-to-teach subjects.

Chapter 29: You get what you measure

This chapter goes hand-in-hand with the previous one and the one before that about unreliable data, but it is not redundant. This is about the unintended consequences of optimizing for the wrong thing entirely.

Hamming has a great example from education (again): Weeding out students who aren’t great at the early stages of mathematics can backfire, because skill at those early stages may be negatively correlated with skill at abstract reasoning in the later stages - which is the real goal.

There are often perverse incentives at a school or company which punish the very thing they claim to want.

Hamming uses the perfect example from my own industry: Measuring programmer productivity by lines of code produced. It’s like rating restaurants by how fast they can shovel food into a bucket or artists by how many square feet of canvas they can fill in a day.

In retrospect, I think this chapter also ties in perfectly with the "micromanagement" section way back in Chapter 2. That is, micromanaging the work will tend, at the average company, to reward hitting deadlines and churning out work at the expense of all else.

Chapter 30: You and your research

At last, we have arrived at the final chapter, which is the title of Hamming’s talk that inspired this book.

My summary:

-

It is worth trying to accomplish goals you set yourself.

-

It is worth setting high goals.

It is easy to balk at Hamming’s actual wording. Terms like "great work" and "greatness" appear a lot.

But revisiting the talk (which I had originally read in the form of an IEEE article) in this chapter, I see what was missing the first time: Hamming is saying that you should do things that are important to you! That’s a big difference.

In other words, it’s good to have a personal goal that is as lofty as you can bear to make it and pursue it.

Even better, Hamming clearly understands that brains come in many forms and so does luck. Raw brainpower isn’t everything. But you can only accomplish your great things if you’re actually trying. So the first step is to identify and pursue those things.

Another important thing to keep in mind, as Hamming points out, is that sometimes bad conditions are just what you need to make significant discoveries - "necessity is the mother of invention" and all that.

Spend 10% of your time on the big-picture thoughts and research and learning. It will pay off with compound interest.

Have a collection of problems you’d like to solve so when new ideas come, you’re ready with different areas to apply them.

Give credit to others. It always pays off and respect is increased all around. You will never diminish your own contribution by crediting others with theirs.

Truly, I think, these are great words to live by.